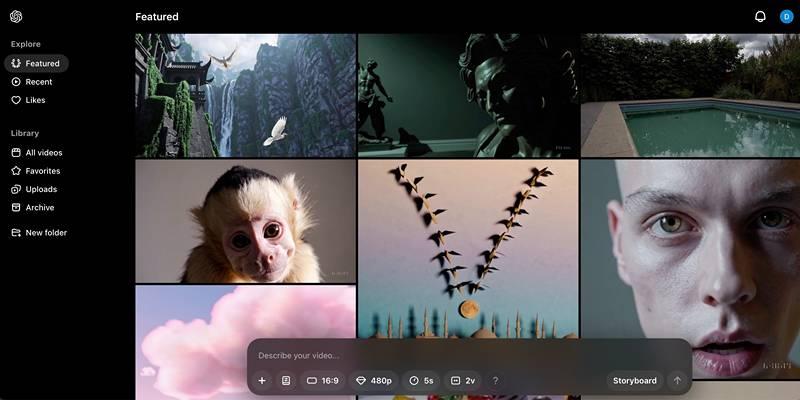

OpenAI’s Sora is one of the most talked-about tools for making AI videos. It quickly caught the attention of creators, marketers, and digital artists alike because it can turn simple text prompts into full-fledged videos. Yet, for all its promise, most users quickly realize that Sora is far from perfect. While the tool can produce high-resolution, stylistically compelling clips, it often struggles with realism, object interaction, and complex motion.

So, what separates the eye-catching, viral Sora videos from the glitchy, uncanny outputs many users encounter? The answer often lies in how the prompt is crafted and how well the user understands the tool’s current limitations. By applying three simple but powerful strategies, users can dramatically improve the quality and realism of their Sora-generated videos.

Here are three essential tips every Sora user should know to take their AI videos from awkward to awe-inspiring.

1. Minimize Object Interactions to Avoid Unnatural Motion

One of Sora’s most noticeable limitations lies in how it handles physical interactions between objects or characters. In theory, prompting a person to eat, dance, or manipulate tools should yield smooth, realistic actions. In practice, however, these interactions are often the first element to break down.

A now-popular test among AI enthusiasts is the “Will Smith eating spaghetti” prompt. While humorous, the resulting video typically fails on multiple fronts: the character doesn’t resemble Will Smith, the fork bends unnaturally, and the spaghetti behaves like a ghostly blob with no real-world physics.

Even simpler tasks, like someone opening a door, playing an instrument, or petting an animal, are often rendered with awkward, disjointed movements. Sora has yet to master natural object interaction.

The Fix: Focus on Non-Interactive Scenes

Instead of trying to prompt sequences that involve detailed hand-eye coordination or fine object handling, users should lean into static or lightly animated scenarios. Wide shots, ambient camera pans, and characters walking or riding are far more likely to produce coherent results.

For instance, a prompt such as:

“A dramatic, wide-angle shot of a knight riding a horse through the countryside at sunset.”

...works well because it avoids close-up detail and complex interaction. While the horse’s gait might still seem slightly unnatural, the broader composition tends to look cinematic and visually consistent.

The key is to avoid prompts that depend on precise physics and instead favor movement that is broad, minimal, or environmental. This produces a cleaner, more believable video that plays to Sora’s current strengths.

2. Reduce Prompt Complexity for Smoother Visual Output

A common mistake among new Sora users is the assumption that more descriptive prompts lead to better results. While this approach may work well with text-based models like ChatGPT, AI video tools operate differently. The more moving parts a prompt contains—such as multiple characters, rapid actions, or scene transitions—the more likely the video will appear jumbled or unnatural.

A great example of this is seen in popular videos like “Egypt 3099,” created using other AI tools like Kling. These videos often go viral for their polished aesthetics and atmospheric immersion. But upon closer inspection, what makes them work is their lack of movement complexity. The creators intentionally keep the motion simple—fog drifting, a person walking, or lights flickering—while emphasizing the mood and style.

The Fix: Embrace Simplicity

Sora performs best when it’s asked to generate one scene, one subject, and one primary motion. Trying to force a sequence of events, multi-character interactions, or narrative developments in a single prompt will almost always degrade the output.

Compare these two prompts:

- “Two astronauts exit a spaceship, wave at each other, jump over rocks, and plant a flag on a distant planet.”

- “A slow panning shot of an astronaut standing on a red desert planet under a hazy sky.”

The second prompt eliminates layered actions and gives Sora the breathing room it needs to focus on aesthetics, style, and rendering quality. As a best practice, users should aim to tell visual stories in pieces, breaking complex ideas into multiple prompts and combining them later through video editing tools if needed. This modular approach results in cleaner scenes, better visual flow, and far fewer errors.

3. Prioritize Style and Aesthetic Over Precise Action

Another insight many experienced Sora users share is that the tool often responds better to mood and style cues than to rigid, detailed descriptions. While users might expect it to render exact facial expressions or body gestures, Sora seems to prioritize broader emotional or stylistic themes instead.

One user tested this theory with a playful prompt:

“Show a dramatic cowboy giving a flirtatious smirk while lifting his boots.”

The result? There was no smirk or boot-lifting, but the overall tone and cinematography of a dramatic Western scene came through beautifully. The setting, lighting, and framing carried the emotional weight the user was looking for, even if the literal instructions were missed.

In another case, a user prompted:

“Using found footage to make a scary movie, show a kitten walking toward the camera in a dark alley.”

Although the kitten’s movement was quirky and its direction was reversed, the horror style, from shaky cam to eerie lighting, was executed almost perfectly.

The Fix: Lead With Mood, Not Micro-Motion

Rather than focusing on hyper-specific gestures or behaviors, users should describe:

- Genre and cinematic style (e.g., noir, sci-fi, horror)

- Lighting and atmosphere (e.g., moody, dreamlike, surreal)

- Camera type or motion (e.g., handheld, panning, slow zoom)

- Color palette or tone (e.g., muted, vibrant, grainy)

These cues help Sora deliver more visually compelling and emotionally resonant content, even if the action lacks photorealistic detail. This approach turns Sora into a powerful cinematic mood board generator, ideal for short-form storytelling, concept previews, or visual inspiration.
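For creators who script their prompts, for example when generating many variations of one shot, the mood checklist can be captured in a small template. The sketch below is a hypothetical Python helper, not part of Sora or any OpenAI API. It joins whichever style cues are supplied, then appends a single subject with a single primary motion, following the one-scene, one-subject, one-motion guideline from tip 2.

```python
def build_prompt(subject: str, motion: str, *cues: str) -> str:
    """Hypothetical helper: mood and style cues first, then one subject and one motion."""
    # Keep only non-empty cues (genre, lighting, camera, palette, ...), trimmed.
    style = ", ".join(c.strip() for c in cues if c.strip())
    # The core of the shot: exactly one subject doing one primary motion.
    shot = " ".join(p for p in (subject.strip(), motion.strip()) if p)
    if not shot:
        raise ValueError("a prompt needs at least a subject or a motion")
    text = f"{style}: {shot}" if style else shot
    # Capitalize the first letter and end with a period.
    return text[0].upper() + text[1:] + "."


if __name__ == "__main__":
    print(build_prompt(
        "an astronaut",
        "standing on a red desert planet under a hazy sky",
        "slow panning shot", "sci-fi", "dreamlike lighting", "muted palette",
    ))
```

Running it prints "Slow panning shot, sci-fi, dreamlike lighting, muted palette: an astronaut standing on a red desert planet under a hazy sky." Putting the cues before the action is a stylistic choice that mirrors the "lead with mood" advice; the ordering is an assumption, not a documented Sora requirement.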

Conclusion

While OpenAI’s Sora is still evolving, users can achieve dramatically better video outputs by understanding its current strengths and limitations. By avoiding complex object interactions, simplifying prompt structures, and focusing on mood and visual style rather than precise actions, creators can produce cleaner, more compelling results. These three tips help transform Sora from a novelty tool into a practical asset for visual storytelling.